Low latency programming: Memory model and atomic operations.

The most important feature in modern C++: new awareness multithreading memory model.

1. Memory model

All data in c++ is made up by objects. The C++ standards defines an object as a region of storage, so whatever the object type, it’s stored at one or more memory locations. Let’s visualize an object into memory view.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

Object in user view Memory location

┌──────────────────────────────────┐ ┌───────────────────────┐

│ struct my_data │ │ i │

│ { │ ├───────────────────────┤

│ int i; │ │ d │

│ double d; │ ├───────────────────────┤

│ unsigned bf1:10; │ │ bf1 │ bf2 │

│ int bf2:25; │ ├───────────────────────┤

│ int bf3:0; │===========>│ bf3 │

│ int bf4:9; │ ├───────────────────────┤

│ int i2; │ │ bf4 │

│ char c1, c2; │ ├───────────────────────┤

│ std::string s; │ │ i2 │

│ }; │ ├───────────────────────┤

└──────────────────────────────────┘ │ c1 │

├───────────────────────┤

│ c2 │

├───────────────────────┤

│ s │

└───────────────────────┘

Methods in C++ are organized in a different way, there are two types of methods: non-virtual and virtual methods. non virtual methods are treated as normal functions by the compiler:

1

2

3

4

5

6

7

8

9

10

┌────────────────────┐

│ struct my_object │

│ { │

│ int x; │ ┌───────Text memory segment───────┐

│ void add(int y) │=====>│ void add(my_object *obj, int y) │

│ { │ │ { │

│ x += y; │ │ obj->x += y; │

│ } │ │ } │

│ }; │ └─────────────────────────────────┘

└────────────────────┘

Meanwhile virtual methods require extra memory to look at the V-Table at runtime. The vptr is added to the object’s memory location by the compiler automatically. It holds pointers to the actual method address.

1

2

3

4

5

6

7

8

9

Memory

Location

┌──────────────────────────────────┐ ┌──────────┐ ┌Text memory segment─┐

│ struct my_object │===>│ vptr │==> │ method(int y) │

│ { │ ├──────────┤ │ {...} │

│ int x; │ │ x(int) │ └────────────────────┘

│ virtual void method(int y); │ └──────────┘

│ }; │

└──────────────────────────────────┘

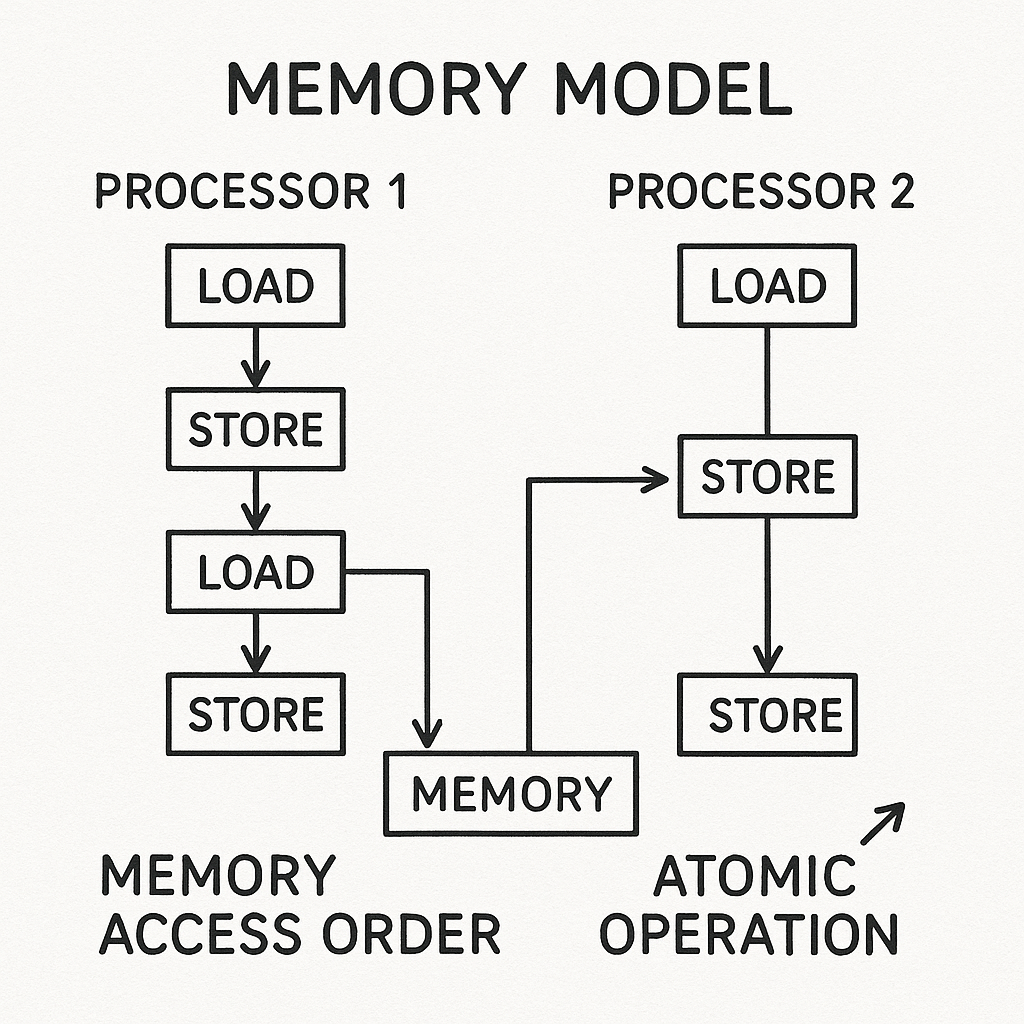

2. Memory access order

Objects are actually memory regions, so when we access the objects, we actually access memory locations. In that case, we can either do read (load) or write (store) memory. But what is the actually order those memory region can be accessed? there are some point of view about orders:

- The written code order (that’s what we wrote).

- The compiler generated code order (the machine code generated by the compiler).

- And the CPU instruction execution order.

Those order can be same, or (mostly) different. Let’s get some examples:

1

2

3

4

5

6

void func(int &x, int&y)

{

x += 1;

y += 10;

x += 2;

}

In this case, the compiler can reorder the instructions to make the code more efficient, for example it can do: x+=3 and y+=10 as long as the result is same. Another example:

1

2

3

4

5

6

void func(int &x, int&y)

{

x += 1;

y = 10 + x;

x += 2;

}

Now the compiler can’t reorder statements due to the dependency of x in y. But when the CPU executes generated instructions, it can execute in different orders. One of the popular is called out of order execution, in short, the CPU may execute loads/stores/ALU operations out of order to improve performance. For example:

1

2

3

4

5

6

mov eax, [var1] ; [1]: load variable var1 into reg eax

inc eax ; [2]: eax += 1

mov [var1], eax ; [3]: store reg eax into var1

xor ecx, ecx ; [4]: ecx = 0

inc ecx ; [5]: ecx += 1

add eax, ecx ; [6]: eax = eax + ecx

The CPU can do step [1] to load the var1, while loading, it could issue some of the later instructions such as [4] and 5 too to set the ecx. Once step [1] is ready [2], [3] and finally [6] will be executed. So the CPU only issues a load operation, doesn’t wait, and do other task, that can avoid idling state and maximize the performance.

Those examples prove one point that the code we write can be different with the final instructions executing by the CPU. The CPU, compilers were free to reorder, cache, or optimize variables without any rules about multithreading. That’s totally fine — because to your single thread, results are the same. The problems come out when we start working in multithreading, there’s no general rules for compilers or CPUs to do that, and neither of them know about different threads. And we need to tell them, that’s actually what C++ 11 provide: atomic operations and locking.

3. Memory model since C++11

So far as we know, everything hinges on memory locations. If multithread access to the same location, and at least one try to modify, there’s a potential of race condition. To avoid that, there has to be an enforced ordering between the accesses in the threads. For example, there could be a fixed order, where one thread always run first, or whatever, just make sure there is some defined ordering.

One way to guarantee the order is locking with mutexes. When two threads try to lock the same mutex, one will be blocked until the other unlock it. So we can do the memory access while the mutex is locking without worrying about other’s access.

The other way is to use synchronization properties of atomic operations, either on same or different memory region, accessing to those regions is enforced an ordering.

3.1. Atomic operations in compiler and CPU perspectives

In multithread environment, if the object isn’t a atomic type, you are responsible for making sure that there’s a sufficient synchronization to guarantee that threads are agree on the modification order of each variable. But if you do use atomic operations, the compiler is responsible for ensuring the right synchronization is in place. Let’s take a look at this example, to see how the compiler treats a atomic variable compare with a normal variable.

1

2

3

4

5

6

7

Coder view Compiler assembler view

┌────────────────────┐

│ int counter = 0; │ ┌─────────────────────────────────┐

│ f() { │=====>│ mov eax, [counter] │

│ counter++; │ │ add eax, 1 │

│ }; │ │ mov [counter], eax │

└────────────────────┘ └─────────────────────────────────┘

The compiler sees no synchronization and might assume that, only that single thread access this variable, it can be kept in a register and even can do some reorder load/store to make the code more efficient. Now let’s see how it assembles an atomic variable (in a x86 machine):

1

2

3

4

5

6

7

Coder view Compiler assembler view

┌─────────────────────────┐

│ std::atomic<int> = 0; │ ┌─────────────────────────────────┐

│ f() { │=====>│ lock add dword ptr [counter], 1 │

│ counter++; │ └─────────────────────────────────┘

│ }; │

└─────────────────────────┘

The lock prefix tell the CPU: This instruction must be atomic across all cores and flush necessary store buffers. Now the responsible is pushed to the CPU and hardware to guarantee no interleaving from other thread. The C++ memory model defines an abstract machine layer to archive independence from any specific CPU, but the feature might not always available on every CPU. And the memory accessing order rules are dependent on the machine too, some might very strictly compare with other. But because it’s actually taken care by C++ hardware abstraction layer, this blog will not dig into features of each machine architecture. In a application view, C++ provides us some kind of memory order that we will dive deep into in the few next sections.

3.2. C++ atomic standard library

The std::atomic<> class template provide a subset of atomic operations. Atomic types are not copyable or assignable. However they support assignment from and implicit conversion to corresponding built in types. There are three types of operations:

- Load operations.

- Store operations.

- Read-modify-write operations.

You can either do it by using methods: load(), store(), exchange(), fetch_add(), fetch_or(), compare_exchange_weak(), compare_exchange_strong() or compound assignment operators: +=, -=, ++, --, etc. if appropriate. There is no different between them, except with methods, you can specify the memory order you want to enforce the compiler too.

3.3. Storing a new value (or not) depending on the current value

Sometimes you want to check the atomic value as a condition to decide what to do with it. For example:

1

2

3

4

5

6

7

8

9

10

std::atomic<int> x = 0;

void func(int y)

{

if (x == 0)

{

// Do something.

x += y;

}

}

The problem with this code is, even if working on the x variable is safe, but the logic can be wrong. Multithread can run over the if condition with x = 0, and the x can be added multiple times with y. To avoid that situation, the compare and swap (known as CAS) operations can be used, and in C++, we can do by using compare_exchange_*(expected, desired) methods.

Both compare_exchange_weak() and compare_exchange_strong() do compare the atomic value with a expected value and stores the supplied desired value if they are equal. If the operation fail due to not equal, the expected value is loaded as the atomic current value. The different is that compare_exchange_weak() storing can be false even if the expected value is equal. That normally due to lacking single instruction of the architecture to do CAS, and the CPU can not guarantee the operation is atomic (for example, thread context switch inside CAS operation), in that case the atomic value is kept as original. So it should be used in a loop:

1

2

3

4

bool expected=false;

extern atomic<bool> b;

while(!b.compare_exchange_weak(expected,true) && !expected);

The compare_exchange_strong() actually contains a loop inside itself.

3.4. Enforcing ordering

Before understand how the atomic operations can enforce memory access order, let’s define the memory model relations: happens before and synchronize with relationships.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

int data = 0;

std::atomic<bool> data_ready(false);

void reader_thread()

{

while(!data_ready.load()) // <-- [1]

{

std::this_thread::sleep(std::chrono::milliseconds(1));

}

std::cout << "Data: " << data << "\n"; // <-- [2]

}

void writer_thread()

{

data = 10; // <-- [3]

data_ready.store(true); // <-- [4]

}

The write of the data in [3] happens before the write to the flag data_ready in [4]. And the read of the flag data_ready in [1] happens before the read of the data in [2]. When the read of the flag data_ready in [1] is true, and now the write in [4] actually synchronize with the read in [1]. In short, [3] happens before [4], 4 happens before [1], [1] happens before [2]. By this happen before relationship we have an enforced ordering: the write of the data happens before the read of data.

That works fine, and that actually is the default behavior of memory ordering of atomic operations: Sequential consistency or you can specify it explicit by using std::memory_order_seq_cst. This guarantees the execution order of a program in the way you wrote it. So why we need other types of memory ordering? well, everything comes at a price, the std::memory_order_seq_cst is the safest but take the highest cost, and sometimes we don’t need to protect the whole memory barrier around the atomic operations.

1

2

3

4

5

6

7

// Do we always need this part finishing before we perform the atomic operation?

/\ /\ /\

----------Before memory barrier----------

lock add dword ptr [counter], 1 // Atomic operation.

----------After memory barrier-----------

\/ \/ \/

// Do we always need this part have to run only after we finish the atomic operation?

3.4.1. Acquire - release ordering

Back to the previous example, there are 2 atomic operations:

[1]data_ready.load()that load the atomic value and then do some thing.[4]data_ready.store(true);the do some thing and the store the atomic value.

In the first case [1] we don’t care what happen before, that is where acquire ordering useful. We only need instructions after the atomic operation must be executed after the atomic operation, compiler, CPU are not allowed to reorder them to before the operation. For example:

1

2

3

data += 10;

data_ready.load(std::memory_order_acquire);

data += 20;

Are the compiler or CPU is able to reorder like:

1

2

3

// data += 30; Can not re order to here.

data_ready.load(std::memory_order_acquire);

data += 30;

Similarly, in the [4] case we only need to ensure instructions before the store operations should be done first. For example:

1

2

3

data += 10;

data_ready.load(std::memory_order_release);

data += 20;

Are the compiler, CPU are able to reorder like:

1

2

3

data += 30;

data_ready.load(std::memory_order_release);

// data += 30; Can not re order to here.

3.4.2. Relaxing memory ordering

This is the simplest form with lowest cost. You don’t care about the ordering in both side of the memory barrier of the atomic operations. For example, a single object like statistic, counter, etc. You just care about the object, that’s it. Compiler can reorder memory around for efficiency.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

std::atomic<int> total_events = 0;

void func()

{

// Other logics are not my business.

x += 1;

y += 10;

total_events.fetch_add(1, std::memory_order_relaxed);

// Other logics are not my business.

x += 2;

y += 20;

}

The compiler are free to optimize the code around the atomic operation, the CPU are free too run instruction too. For example, it could be:

1

2

3

x += 3;

total_events.fetch_add(1, std::memory_order_relaxed);

y += 30;

3.4.3. Summary

Atomic operations can be treated as a memory boundary by the compiler. The memory barriers before and after the atomic operations can be treated differently based on the ordering type.

1

2

3

4

5

/\ /\ /\

----------Before memory barrier----------

lock add dword ptr [counter], 1

----------After memory barrier-----------

\/ \/ \/

| Memory order | Prevents reordering before → after | Prevents reordering after → before | Typical compiler barrier inserted |

|---|---|---|---|

relaxed | ❌ | ❌ | none |

acquire | ❌ | ✅ | acquire fence after load |

release | ✅ | ❌ | release fence before store |

acq_rel | ✅ | ✅ | full fence around op |

seq_cst | ✅ | ✅ | strongest fence (full compiler + CPU fence) |

4. Bonus: Atomic for user-defined types

The atomic type std::atomic<T> is only work for types T that are trivially copyable (a type is considered as trivially copyable if its value can be copied simply by copying their underlying bytes, similar to how std::memcpy operates). That means no move, copy constructors, assignments, no virtual methods, destructor, etc. A type can be check as trivially copyable or not with std::is_trivially_copyable_v<T>.

5. Bonus: new C standard

C11 has introduced mechanisms to perform atomic operations and memory model under stdatomic.h header.